The second week of my internship has been great! I have learned a great deal, met some extremely smart and creative people, and seen some cool things in and around LA.

To facilitate a better understanding of what I’m working on in this blog post, I will digress for a few moments to explain a few aspects of virtual production that I have learned over the past week. One aspect that has been fascinating to learn is the technology behind the acquisition (aka recording) of motion data. As you may know, motion data is gathered by recording the motion of a set of markers, which are affixed to actors and/or objects, in a 3D space. This data is acquired by a set of cameras in a process which uses infrared light and mathematics. Essentially, once activated, the cameras all emit infrared light into a space (aka “the volume”). The markers on the actors/objects are highly reflective spheres designed to reflect light directly back to its source, so once the infrared light hits these markers, it is reflected directly back into the cameras. However, this alone does not tell a camera where the marker is in space, because mathematically a single line from the camera gives the marker’s direction but not its depth. Once multiple cameras recognize the same marker (with intersecting lines), they can communicate with each other, and geometry and triangulation are used to identify the marker’s location relative to the cameras. Digital Domain’s main stage has 122 16-megapixel motion capture cameras that do this, each with its own on-board processor to gather the data and communicate with the others.
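
To make the triangulation idea a bit more concrete, here is a minimal sketch in Python of how two camera sight lines can pin down one marker. This is only my own illustration of the geometry, not how the cameras on the stage actually do it: the function name `triangulate_two_rays`, the camera positions, and the marker position are all made up, and a real system combines many cameras and accounts for lens distortion and noise.

```python
from typing import Tuple

Vec = Tuple[float, float, float]

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def triangulate_two_rays(o1: Vec, d1: Vec, o2: Vec, d2: Vec) -> Vec:
    """Estimate a marker position from two sight lines.

    Each line starts at a camera position (o1, o2) and points along the
    direction in which that camera saw the marker (d1, d2). Measured lines
    rarely cross exactly, so this returns the midpoint of the shortest
    segment connecting them.
    """
    w = sub(o1, o2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel lines never narrow down the depth of the marker.
        raise ValueError("sight lines are parallel; depth cannot be recovered")
    t1 = (b * e - c * d) / denom  # distance parameter along line 1
    t2 = (a * e - b * d) / denom  # distance parameter along line 2
    p1 = add(o1, scale(d1, t1))   # closest point on line 1
    p2 = add(o2, scale(d2, t2))   # closest point on line 2
    return scale(add(p1, p2), 0.5)

# Hypothetical setup: two cameras 4 units apart, both seeing one marker.
camera_a: Vec = (0.0, 0.0, 0.0)
camera_b: Vec = (4.0, 0.0, 0.0)
marker = triangulate_two_rays(camera_a, (1.0, 2.0, 3.0), camera_b, (-3.0, 2.0, 3.0))
print(marker)
```

With only `camera_a`, every point along its sight line would be an equally valid answer; it is the second line that fixes the depth.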

This overview pertains specifically to what I did on Monday morning with my mentor Greg LaSalle (though I’ll be working with this tech for most of my internship). That morning, when we arrived at the studio, Greg and I re-calibrated the entire camera system as routine preparation for a shoot next week which will occur on that stage. We shut down and rebooted the entire system, then went through a unique process to help the cameras identify where they were in the space and build a framework around that. To do this, I walked around the volume waving a set of markers on a rod, which allowed the cameras to understand their positions relative to one another and create a framework from there. This is done prior to every shoot because camera orientation may shift slightly due to accidental contact between shoots, and periodic reboots keep the system running as efficiently as possible.

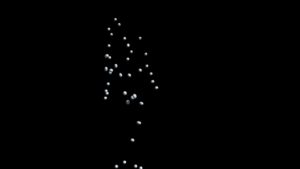

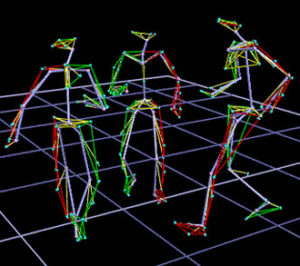

Later that day, I also began to learn how to use the marker data processing software which allows employees to take in the primitive marker clouds (the raw data taken by the cameras), and label the markers to create a workable skeleton. The software to do this is called Shogun Post.

In these generic photos, you can see the raw marker data on the left, and the labeled marker data on the right. Once the markers have been labeled like so, artists can go through and “clean” the motion data to avoid jumps or unnatural shakiness to make it as smooth as possible before sending it to the animation department where the character model is attached to the motion of the actor model. Then finally, artists will add lighting, texture, and other finishing effects before it’s delivered to the studio!
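
As a rough illustration of what “cleaning” a jump can mean, here is a small Python sketch that runs a median filter over one coordinate of one marker. This is an assumption on my part about the general idea, not a description of the tools inside Shogun Post, and the `heights` values are invented for the example.

```python
from statistics import median
from typing import List

def median_filter(samples: List[float], window: int = 3) -> List[float]:
    """Replace each sample with the median of its neighbors.

    A single-frame jump is an outlier within its window, so the median
    ignores it, while slow, real movement passes through mostly unchanged.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    cleaned = []
    for i in range(len(samples)):
        start = max(0, i - half)               # shrink the window at the edges
        end = min(len(samples), i + half + 1)
        cleaned.append(median(samples[start:end]))
    return cleaned

# Made-up heights (in meters) of one marker over six frames; frame 3 is a glitch.
heights = [1.00, 1.01, 1.02, 5.00, 1.04, 1.05]
cleaned = median_filter(heights)
print(cleaned)
```

A wider window removes longer glitches but also flattens quick, genuine movements, so the choice is a trade-off.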

At the end of the day on Monday, Greg and I biked about half an hour to the Technicolor offices in Culver City to try a new VR experience being demo’d there. It was very impressive: the simulation incorporated the typical VR headset and headphones, but also used smells and haptic feedback to tell its story. The website for the project can be found here: https://www.treeofficial.com/

Tuesday was a short day at DD. For the first half of the day, my time was spent furthering my experience with both Maya (3D modeling software) and Shogun Post (marker processing software). Greg and I then took the afternoon off to go to downtown Hollywood and meet some of his friends there for live music in the evening.

On Wednesday, I spent a couple of hours on Shogun Post in the morning just to try to get more familiar with the software. After an early lunch, a few coworkers and I flipped on the England vs Croatia World Cup semifinal and watched the second half of the game on a big monitor on the stage. That afternoon, however, I got the great opportunity to spend some time with Doug Roble, Senior Director of Software R&D at Digital Domain. It was extremely eye-opening to be able to discuss not only what he does at DD, but what the future of MoCap looks like in the entertainment industry. One thing in particular from our conversation that struck me as fascinating was the huge role that mathematics plays in his software development. I hadn’t put much thought into it before, but as he walked me through the future of this tech, I was really excited to see how most of the software that’s being developed is grounded in Linear Algebra and Calculus, among other fields. I can’t really speak specifically to what DD is currently working on, but what I can say is that the future of this technology depends on “machine vision” and “machine learning” to capture performances and environments in high detail without the need for any physical markers.

On Thursday, I got the opportunity to sit in on a couple of professional meetings to plan the logistics for a small shoot next week, as well as to help prep the main MoCap stage for this shoot and another in two weeks. This prep included cleaning up the client area, where guests can sit, direct performances, and review footage, and rearranging equipment on other parts of the stage. It also consisted of gathering the necessary props and marking and labeling them. It was real-world shoot preparation. One fun part was that some of the props we were prepping were the same ones used in Steven Spielberg’s Ready Player One! For a short promo about the making of Ready Player One right here in DD’s facility, see the video at this link:

https://uploadvr.com/watch-steven-spielberg-use-an-htc-vive-to-direct-ready-player-one/

My workstation can actually be seen just to the left of the stage in the first photo!

Now Friday was the most exciting day so far, when I got the chance to suit up in a full MoCap suit and capture data to be used for test footage in a bid for upcoming work. After suiting up and going through some rehearsals, I completed a set of ROMs (Range of Motion), then we were ready to capture. We worked for about 45 minutes and it was a blast. You can see me in the suit below. You might notice that I did not have a full helmet camera on, meaning that we were not capturing facial data, just body data. In the next photo, with the lights turned out and the flash on my camera turned on, you can see the reflectivity of the markers on my suit.

I also collected some data of me running (as I am a passionate runner), so I could go through and see what that raw data looks like in post.

Friday evening, Greg, his friend, and I went to see a live improv musical in Hollywood, which was really funny and quite well done. The show was called Opening Night (because every night is different, so every night is “opening night” of that instance) and has been running every Friday since 1998.
